It is the bedrock of my understanding of the world around me. I think it applies not only to every other human but also to every other living thing which has any semblance of cognition.

Any “thing” which exists cannot, at the same time, be “some other thing”. A thing may be identical in properties to some other things, but the various things each remain uniquely identified in space-time.

Claims of violation of this uniqueness (by some proponents / propagandists of quantum theory) are just Edward Lear types of nonsense physics. Statements about single particles existing simultaneously in different spaces or times are using a nonsense definition of existence. Nonsense mathematics (whether for quantum entanglement or time travel or the Ramanujan summation) is as nonsensical as the Dong with the luminous nose. Nonsense is nonsense in any language. No matter.

There are, it is said, “10^78 to 10^82 atoms in the known universe”. Of course this statement is about the 5% of the known universe which is ordinary matter. It is silent about dark energy and dark matter, which make up the remaining 95%. No matter. It is entirely silent about what may or may not be in the universe (or universes) that are not known. No matter.

… roughly 68% of the universe is dark energy. Dark matter makes up about 27%. The rest – everything on Earth, everything ever observed with all of our instruments, all normal matter – adds up to less than 5% of the universe.

Nevertheless, every known atom in the known universe has a unique identity – known or unknown.

In a sense, every atom in the universe can be considered to have its own identity. Atoms are the basic building blocks of matter and are distinguished by their unique combination of protons, neutrons, and electrons. Each element on the periodic table is composed of atoms with a specific number of protons, defining its atomic number and giving it a distinct identity. For example, all hydrogen atoms have one proton, while all carbon atoms have six protons. Moreover, quantum mechanics suggests that each individual atom can have its own unique quantum state, determining its behavior and properties. This means that even identical atoms can be differentiated based on their quantum states.

It has been guesstimated that there may be roughly 10^97 fundamental particles making up the 10^78 to 10^82 atoms in the known universe. These particles too, and not only atoms, have unique identities.

In fact, every fundamental particle has its own separate identity. Fundamental particles are the smallest known building blocks of matter and are classified into various types, such as quarks, leptons, and gauge bosons. Each fundamental particle has distinct properties that define its identity, including its mass, electric charge, spin, and interactions with other particles. For example, an electron is a fundamental particle with a specific mass, charge of -1, and spin of 1/2. Furthermore, in quantum mechanics, each particle is associated with its own unique quantum state, which determines its behavior and properties. These quantum states can differ even among particles of the same type, leading to their individual identities.

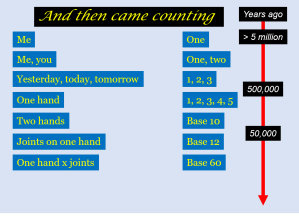

It is the concept of the existence of things, each having a unique identity, which allows us to define a concept of one (1, unity). It is the concept of one which leads to all numbers. The concepts of identity and of “one” are inseparable in both the philosophical and spiritual contexts. But the notion of identity ultimately emerges from the fundamental interconnectedness of the existence of things. And this creates the paradox. Things have separate identities but have the same kind of existence. It is the parameters of that existence which exhibit oneness. But this oneness of existence negates the separate, unique identity of existing things.

Unique identity of existing things gives the concept of one (1) whereas a unifying oneness eradicates what is unique and kills identity.

Identity and oneness can be seen as interconnected in certain philosophical and spiritual perspectives. While they may appear contradictory at first, a deeper exploration reveals a nuanced relationship between the two. Identity refers to the distinguishing characteristics and qualities that make something or someone unique and separate from others. It is the sense of individuality and selfhood that we associate with ourselves and the objects or beings around us. Identity implies a sense of boundaries and distinctions, where each entity is defined by its own set of attributes and properties. On the other hand, the concept of oneness suggests a fundamental unity or interconnectedness that transcends individual identities. It suggests that all things and beings are ultimately interconnected, part of a larger whole or cosmic unity. This perspective emphasizes the underlying unity of existence, where the boundaries and distinctions that define individual identities are seen as illusory or superficial.

In some philosophical and spiritual traditions, the concept of oneness is understood as the ultimate reality or truth, while individual identities are considered temporary manifestations or expressions of this underlying oneness. From this viewpoint, individual identities are like waves in the ocean—distinct for a time but ultimately inseparable from the ocean itself. However, it’s important to recognize that these concepts can be understood and interpreted differently across various philosophical and spiritual frameworks. Some may place more emphasis on the individual identities and the uniqueness of each entity, while others may emphasize the interconnectedness and unity of all things. Ultimately, whether one considers identity and oneness as inseparable or not depends on their philosophical, spiritual, or cultural perspectives. Both concepts offer valuable insights into understanding our place in the world and our relationship with others, and exploring the interplay between identity and oneness can lead to profound philosophical and existential contemplations.

This perspective can be found in various philosophical and spiritual traditions, certain forms of mysticism, and some interpretations of quantum physics. It suggests that the perceived boundaries and separations that define individual identities are illusory, and the true nature of reality is the oneness that transcends these apparent divisions. Identity and one (1) could well be illusory, just as all numbers, and the mathematics which follows from them, are not real.

However, it’s important to note that not all philosophical or spiritual perspectives hold this inseparability between identity and oneness. Other perspectives may emphasize the importance of individual identities, personal autonomy, and the uniqueness of each entity. As with any philosophical or metaphysical concept, the relationship between identity and oneness can be a matter of interpretation and personal belief. Different perspectives offer diverse insights into the nature of reality, and individuals may resonate with different understandings based on their experiences, cultural backgrounds, and philosophical inclinations.

Where numbers come from