Selective breeding works.

Humans have applied it – and very successfully – to plants and animals since antiquity.

There is nothing conceptually “wrong” with eugenics, the selective breeding of humans. But the Nazis – and not only the Nazis – brought all of eugenics into disrepute by the manner in which they tried to apply the concept. Forced sterilisation, forced abortions, genocide, euthanasia and mass murder were used by the Nazis, and in the coercive treatment of some minorities in Europe and of the Aborigines in Australia, to try to control the traits of future generations. As a result, eugenics has become inextricably associated with the methods used. Even in more recent times, genocide, mass rape and mass murder have been evident, even if not openly for the purpose of controlling the genetic characteristics of the survivors.

I note that evolution by “natural selection” does not intentionally select for any particular traits. Surviving traits are due to the deselection of individuals who lack the wherewithal to survive until reproduction. Natural Selection in that sense is not pro-active, and evolution is merely the result of changing environments that cause individuals of a species who cannot cope with the change to perish. Evolution has no direction of its own and is just the result of who survives an environmental change. It is not some great force which “selects” or leads a species into a desired future. Species fail when the spread of traits and characteristics among their existing individuals is not wide enough to produce some individuals who can survive the environmental change. Natural Selection is therefore not an intentional selection process; it represents the survivors of change. Of course, not all traits have a direct influence on survival. All “collateral” traits are carried along – coincidentally and unintentionally – with those traits which do actually help survival in any particular environment. But as conditions change, what was once a collateral trait may become one which assists survival.

As breeding techniques go, “Natural Selection” relies on a wide variation of traits to throw up viable individuals able to cope however the environment changes, while “Artificial Selection” chooses particular traits to promote but runs the risk of unwanted collateral traits showing up (as with some bulldogs unable to breathe, or with the development of killer bees). Natural selection is the shotgun to the rifle of artificial selection. The shotgun usually hits the target but may not provide a “kill”. The rifle usually kills, but it could easily miss or even kill the wrong target!

Of all the babies conceived today, about 1% are conceived by “artificial” means (IVF or surrogacy), and these include a measure of genetic selection. Even the other 99% include a measure of partner selection and – though very indirectly – a small measure of genetic selection. A significant portion (perhaps around 20%?) result from “arranged” marriages, where some due diligence accompanies the “arrangement”. Such due diligence tends to focus on economic and social checks but inherently contains some “genetic selection” (for example, by excluding partners with a family history of mental or other illnesses). If eugenics were only about deliberate breeding programmes seeking particular traits, then we would not be very far down the eugenics road. But, more importantly, around 20-25% of babies conceived are aborted, and this represents a genetic deselection. As a result, a form of “eugenics by default” is already being applied today.

(The rights and wrongs of abortion are another discussion which – in my opinion – is both needless and tainted. Abortion, I think, is entirely a matter for the pregnant female and her medical advisors. I cannot see how anybody else – male or female – can presume to impose the having or not having of an abortion on any pregnant person. Even the male sperm donor does not, I think, warrant any decisive role in what another person should or should not do. No society requires a female to get its approval for conceiving or having a child (with the exception of China’s one-child policy). Why then should not having a child require such approval? While society may justifiably impose rules about infanticide, abortion – by any definition – is not the same as infanticide. Until the umbilical cord is severed, a foetus is essentially parasitic, totally dependent upon its host mother and not – in my way of thinking – an independent entity. I cannot and do not have much respect for the Pope or other religious mullahs who would determine whether I should shave or whether a woman may have an abortion.)

Consider our species as we breed today.

In general, the parents of children being conceived today share a geographical habitat. Apart from the necessity – so far – of the parents having to meet physically, it is geographical proximity which I think has dominated throughout history. Victors of war, conquerors, immigrants, émigrés and wanderers have all succumbed to the lures of the local population within a few generations. In consequence, partners often share similar social, religious and ethnic backgrounds, but geographical proximity takes precedence. Apart from isolated instances (Ancient Greece, the Egypt of the Pharaohs, the persecution of the Roma, European royalty, Nazi Germany and the caste system on the Indian sub-continent), selective breeding solely to promote or eliminate specific genetic traits has never been the primary goal of child-bearing. Even restrictive groups in which marrying outside the “community” is discouraged (some Jews and Parsis, for example) have been, and still are, more concerned about not diluting inherited wealth than about promoting specific genetic traits.

But it is my contention that we are in fact – directly and indirectly – exercising an increasing amount of genetic control in the selection and deselection of our offspring, so much so that “eugenics by default” is already being applied to a significant degree in the children being born today.

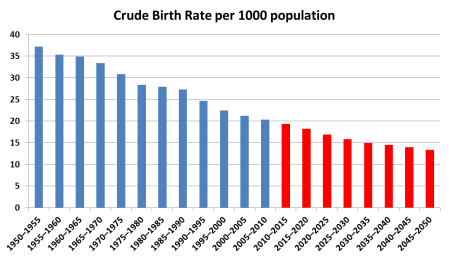

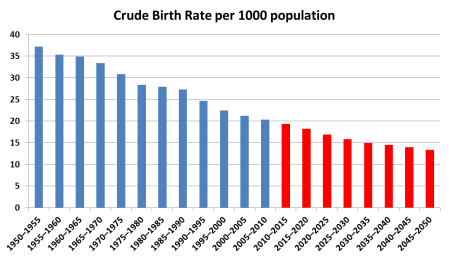

Currently the global birth rate is around 20 per 1000 of population (2%), having been around 37 in 1950 and projected to reduce to around 14 (1.4%) by 2050.

Crude birth rate actual and forecast: UN data

Of these, the proportion conceived by artificial means (IVF and surrogacy) is probably around 1% (around 0.2 births per 1000 of population). In the UK in 2010, for example, around 2% of live births were conceived by IVF. In Europe this is probably around 1.5%, and worldwide it is still less than 1%. But this proportion is increasing and could more than double by 2050 as IVF spreads into Asia and Africa. By 2050 it could well be that around 3% of all live births are conceived by “artificial” means, with a much greater degree of genetic screening applied.
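As a rough cross-check of this arithmetic, here is a minimal Python sketch that turns a crude birth rate and an assumed share of “artificial” conceptions into births per 1000 of population. The inputs are simply the figures quoted above (about 20 per 1000 with roughly 1% artificial today; about 14 per 1000 with a possible 3% by 2050), and the function name is hypothetical, chosen for illustration only.

```python
def artificial_births_per_1000(crude_birth_rate: float, artificial_share: float) -> float:
    """Births per 1000 of population conceived by 'artificial' means (IVF or surrogacy).

    crude_birth_rate: live births per 1000 of population per year.
    artificial_share: fraction of live births conceived artificially (0.01 means 1%).
    """
    return crude_birth_rate * artificial_share


# Figures quoted in the text; the 2050 values are projections, not data.
today = artificial_births_per_1000(20, 0.01)
by_2050 = artificial_births_per_1000(14, 0.03)

print(f"today:   {today:.2f} per 1000 of population")
print(f"by 2050: {by_2050:.2f} per 1000 of population")
```

Because the birth rate itself is projected to fall, tripling the artificial share would only roughly double artificial births relative to population, from 0.2 to about 0.42 per 1000.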

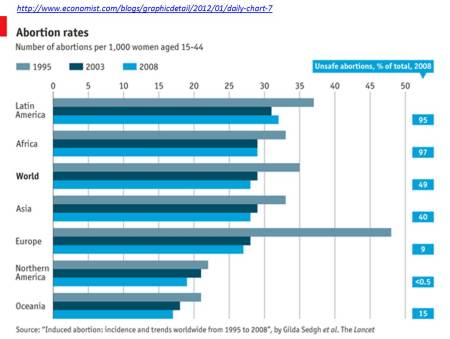

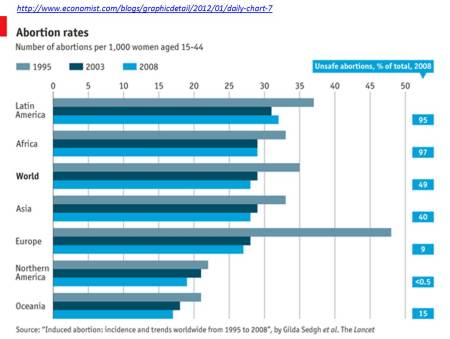

Abortion rates increased sharply after the 1950s as medical techniques developed to make it a routine procedure. Done properly, it is relatively risk-free, though there are still many “unsafe” abortions in developing and religiously repressive countries. Since 1995, abortion rates worldwide have actually decreased, from about 35 per 1000 women of child-bearing age to about 28 today. These numbers would indicate that the number of abortions taking place today is around 20-25% of the number of live births.

Global abortion rates: graphic from The Economist (http://www.economist.com/blogs/graphicdetail/2012/01/daily-chart-7)

So of every 100 babies conceived, around 25 are deselected by abortion and 75 proceed to birth. Only 1 of these 75 would have been conceived by “artificial” means. The genetic deselection by abortion is both direct and indirect. The detection of genetic defects in the foetus often leads to abortion, and this proportion can be expected to increase as techniques for the early identification of defects, or of a propensity to develop a debilitating disease, are perfected. In many cases abortion is to safeguard the health of the mother and does not – at least directly – involve any deselection for genetic reasons. In many countries, especially India, abortions are often carried out to avoid a girl child, and this is a direct genetic deselection. It seems to apply particularly to a first child. The majority of abortions today are probably for convenience. But if the “maternal instinct” is in any way a genetic characteristic, then even such abortions would tend to deselect in favour of those who do have the instinct.
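Abortion figures can be expressed against two different bases: as a share of live births or as a share of conceptions. A minimal Python sketch of the conversion between them, under the simplifying assumption that every conception ends either in an abortion or a live birth (miscarriages and stillbirths are ignored). The function and parameter names are hypothetical, for illustration only.

```python
def per_100_conceptions(abortions_per_birth: float, artificial_share: float) -> dict:
    """Split 100 conceptions into abortions and live births.

    Assumes every conception ends in either an abortion or a live birth
    (miscarriages and stillbirths are ignored).
    abortions_per_birth: abortions divided by live births (0.25 means 25%).
    artificial_share: fraction of live births conceived by IVF or surrogacy.
    """
    births = 100 / (1 + abortions_per_birth)
    abortions = 100 - births
    return {
        "aborted": abortions,
        "born": births,
        "born_artificial": births * artificial_share,
    }


for ratio in (0.20, 0.25, 1 / 3):
    split = per_100_conceptions(ratio, 0.01)
    print(f"abortions = {ratio:.0%} of births -> "
          f"{split['aborted']:.1f} aborted, {split['born']:.1f} born, "
          f"{split['born_artificial']:.2f} born after artificial conception")
```

Under this assumption, abortions at 20-25% of live births correspond to roughly 17 to 20 of every 100 conceptions, while 25 of every 100 conceptions corresponds to abortions running at about a third of live births.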

The trends, I think, are fairly clear. The proportion of “artificial births” is increasing, and so is the element of genetic selection by screening for desired characteristics in such cases. The number of abortions would seem to be on its way to some “stable” level of perhaps 25% of all conceptions. The genetic component of the decision to abort, however, is also increasing, and it is likely that the frequency of births where genetic disorders exist, or where the propensity for debilitating disease is high, will decrease sharply as genetic screening techniques develop further.

Humans breeding for specific characteristics is still a long way off, but even what is being practised now is the start of eugenics in all but name. And it is not difficult to imagine that eugenics without any hint of coercion, where parents or mothers-to-be select for certain characteristics in their children-to-be, or deselect (by abortion) to avoid others, will become de rigueur.